Fujitsu high-end storage enters the 10-million-IOPS era (SPC-1 v3), surpassing Huawei.

Recently, Chinese manufacturers have released new high-end storage products claiming capacities above one EB and performance of 100 million IOPS. However, no test results have been published, so I generally call such products "PPT storage".

Few third-party organizations in the industry measure storage performance.

Although it has many problems, the Storage Performance Council (SPC) is relatively well known, especially in China. Thanks to Huawei's strong participation, the top 10 storage products are mostly Huawei's.

However, this record has recently been broken by Fujitsu. Fujitsu not only took first place but also pushed performance to 10 million IOPS, whereas the best result for Huawei's high-end storage is 7 million IOPS.

Therefore, the best-performing storage product now comes from Fujitsu, while places two through six are held by Huawei.

Of course, Fujitsu's high-end storage product is the most expensive.

Because it is so expensive, it trails Huawei on cost-effectiveness.
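
To put a number on that trade-off, here is a minimal sketch of the break-even arithmetic. It assumes cost-effectiveness means price divided by IOPS (in the spirit of the price-performance figure SPC-1 reports) and uses only the rounded 10 million and 7 million IOPS figures quoted above. No actual prices are used, and the function name is my own.

```python
def break_even_price_ratio(iops_a: float, iops_b: float) -> float:
    """Return how many times more system A may cost than system B
    before A's price per IOPS becomes worse than B's."""
    return iops_a / iops_b


FUJITSU_IOPS = 10_000_000  # rounded figure quoted in this post
HUAWEI_IOPS = 7_000_000    # rounded figure quoted in this post

ratio = break_even_price_ratio(FUJITSU_IOPS, HUAWEI_IOPS)
print(f"Break-even price ratio: {ratio:.2f}x")
```

In other words, under this assumption the Fujitsu configuration only wins on price per IOPS if it costs less than about 1.43 times the Huawei one.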

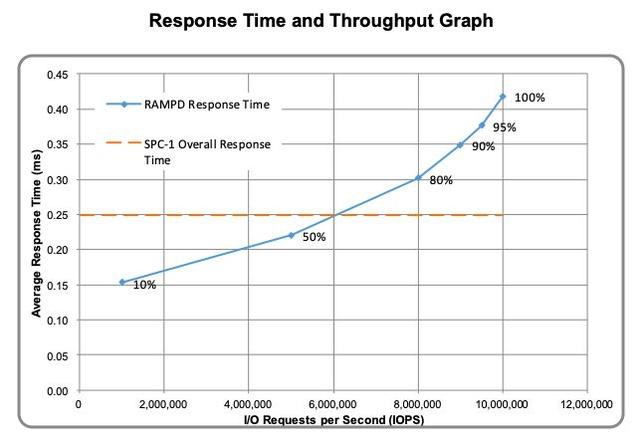

The latency of Fujitsu's high-end storage product is well controlled: at the 10-million-IOPS level it stays below 0.5 ms.

The DX8900 S4 is equipped with 24 controllers (12 control nodes) and 576 400 GB SAS SSDs, and connects to 44 servers over 16G FC.
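
As a rough sanity check on that configuration, the sketch below divides the headline result evenly across the components. It assumes the rounded 10 million IOPS figure, a perfectly even load split, and decimal gigabytes; the variable names are mine, and the real per-component load in the test may differ.

```python
TOTAL_IOPS = 10_000_000  # rounded headline figure from this post
CONTROLLERS = 24
SSDS = 576
SSD_CAPACITY_GB = 400    # decimal gigabytes assumed
SERVERS = 44

# Front-end IOPS if the load were spread perfectly evenly (an assumption).
iops_per_controller = TOTAL_IOPS / CONTROLLERS
iops_per_ssd = TOTAL_IOPS / SSDS
iops_per_server = TOTAL_IOPS / SERVERS

# Raw capacity before RAID and any other overhead.
raw_capacity_tb = SSDS * SSD_CAPACITY_GB / 1000

print(f"IOPS per controller: {iops_per_controller:,.0f}")
print(f"IOPS per SSD:        {iops_per_ssd:,.0f}")
print(f"IOPS per server:     {iops_per_server:,.0f}")
print(f"Raw capacity:        {raw_capacity_tb:.1f} TB")
```

That works out to roughly 417,000 IOPS per controller, about 17,400 per SSD, and 230.4 TB of raw capacity.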

The DX8900 S4 does not use NVMe, let alone NVMe-oF, so I expect the world record will not stand for long. Once the Dorado 18000 NVMe comes out, Huawei should have little trouble retaking first place.

Of course, with RAID 1 plus metadata and other overhead, the usable capacity in the tested configuration comes to less than 40% of raw capacity.
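
The capacity arithmetic behind that figure can be sketched as follows. This assumes the "less than 40%" refers to usable capacity as a share of raw capacity, that RAID 1 alone halves capacity, and that the 576 SSDs are 400 GB each in decimal units. The variable names are mine, and the result is a bound, not a measured value.

```python
SSDS = 576
SSD_CAPACITY_GB = 400     # decimal gigabytes assumed
RAID1_EFFICIENCY = 0.5    # mirroring stores every block twice
YIELD_UPPER_BOUND = 0.40  # "less than 40%" as stated in this post

raw_tb = SSDS * SSD_CAPACITY_GB / 1000
after_mirroring_tb = raw_tb * RAID1_EFFICIENCY
usable_upper_bound_tb = raw_tb * YIELD_UPPER_BOUND

# Whatever is lost beyond mirroring goes to metadata and other overhead.
min_other_overhead_tb = after_mirroring_tb - usable_upper_bound_tb

print(f"Raw capacity:           {raw_tb:.2f} TB")
print(f"After RAID 1 mirroring: {after_mirroring_tb:.2f} TB")
print(f"Usable (upper bound):   {usable_upper_bound_tb:.2f} TB")
print(f"Other overhead (min):   {min_other_overhead_tb:.2f} TB")
```

So of 230.4 TB raw, mirroring leaves 115.2 TB, and a yield below 40% means under 92.16 TB is usable, with at least 23.04 TB going to metadata and other overhead.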

Although high-end storage has entered the 10-million-IOPS era, I no longer pay much attention to traditional high-end storage; I am currently learning open-source storage and SDS instead. After reading several books on Ceph, I think ZTE's book is the best written: unlike the others, it explains a large number of principles rather than walking through code. So I recommend it to people who, like me, are moving over from traditional storage.

However, I find it very difficult to switch from traditional storage to open source. I am still not quite sure how Ceph's Monitor and OSD mechanisms work in practice, for example how to deploy an extended cluster. These details are not covered in ZTE's book. If anyone has experience deploying Ceph extended clusters, I hope they will write a book on best practices for them.